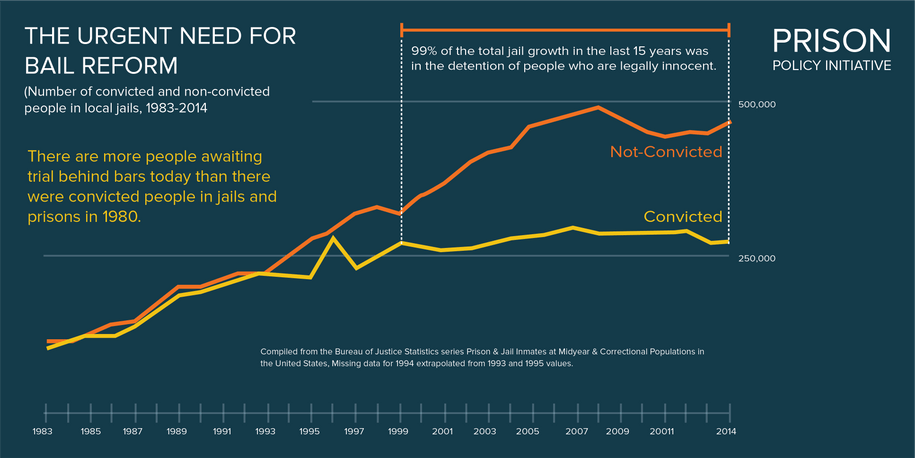

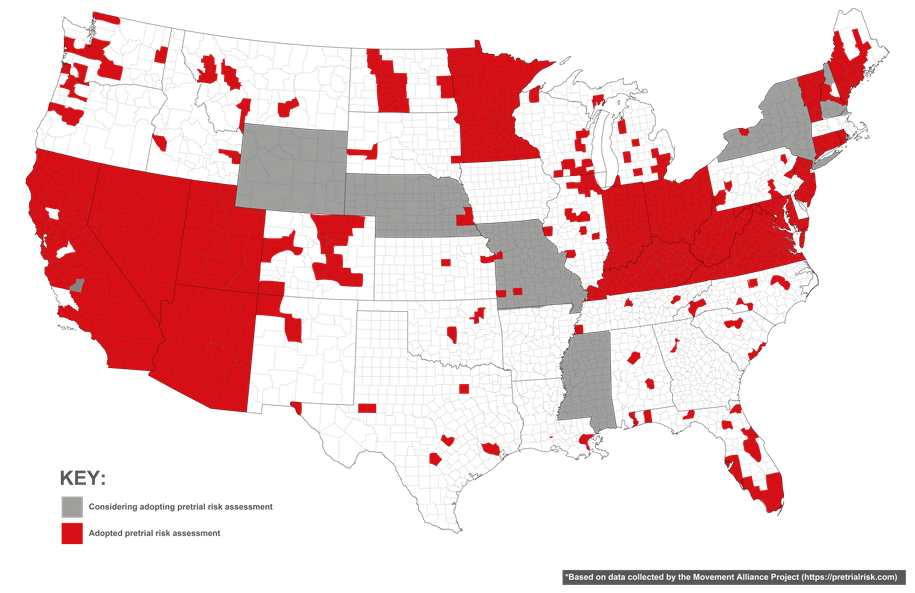

Pretrial risk assessments have emerged as an apparent solution to this crisis. The hope is that, by using large datasets to predict future arrest, these algorithms can help reduce the number of people incarcerated pretrial and lower racial disparities.

But to date, risk assessments haven’t delivered on that promise. Studies have shown that they are not especially accurate and that they are biased against Black people.

Risk assessments also represent a more fundamental problem: our tendency to use technology to monitor and control the behavior of marginalized groups rather than to hold those in power accountable.

Mass pretrial incarceration is driven by judges who unlawfully send people to jail. Yet current algorithms focus exclusively on the behavior of people awaiting trial, rather than on the behavior of judges. An algorithm that "studies up" would make judges the focus of predictive modeling.

Our team created a judicial risk assessment tool.

The algorithm assigns judges a risk score based on their likelihood of failing to adhere to the Constitution by unlawfully incarcerating someone pretrial.

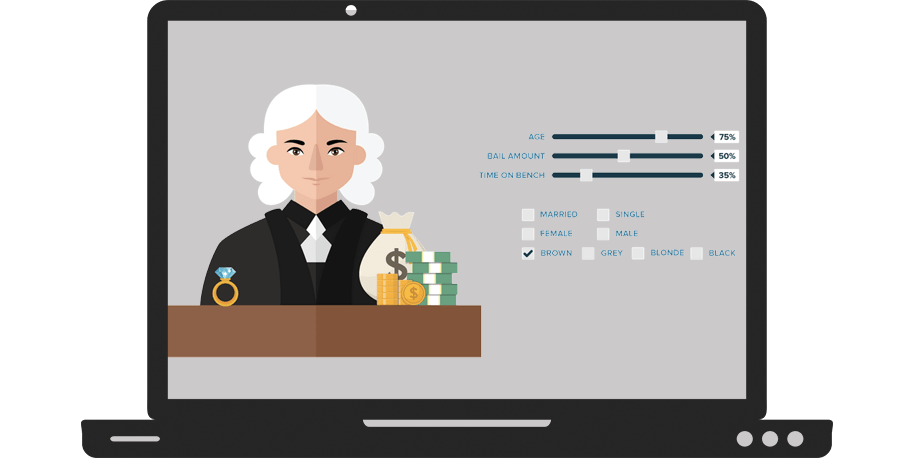

This tool relies on court records and demographic data. As in other risk assessments, age is the most predictive factor.

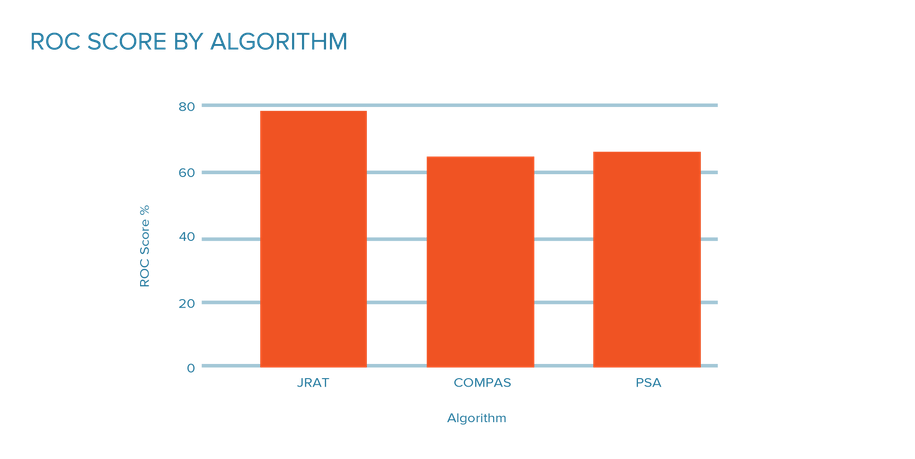
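To make the idea of a judicial risk score concrete, here is a minimal sketch of how such a score could be computed, assuming a simple logistic model. It is not the tool's actual model: the feature names, the weights, the intercept, and the six-level scale are all hypothetical placeholders chosen for illustration, and even the direction in which age affects the score is an assumption.

```python
import math
from dataclasses import dataclass


@dataclass
class JudgeRecord:
    """Hypothetical inputs; the real tool's features are not specified here."""
    age: int                     # demographic data
    prior_detention_rate: float  # share of past pretrial cases ending in detention (court records)


# Placeholder coefficients, NOT fitted to any data.
INTERCEPT = -3.0
WEIGHTS = {"age": 0.04, "prior_detention_rate": 2.0}


def risk_probability(judge: JudgeRecord) -> float:
    """Logistic estimate of the chance of an unlawful pretrial detention."""
    z = (
        INTERCEPT
        + WEIGHTS["age"] * judge.age
        + WEIGHTS["prior_detention_rate"] * judge.prior_detention_rate
    )
    return 1.0 / (1.0 + math.exp(-z))


def risk_score(judge: JudgeRecord, levels: int = 6) -> int:
    """Bucket the probability into an integer score from 1 to `levels`."""
    p = risk_probability(judge)
    return min(levels, int(p * levels) + 1)


if __name__ == "__main__":
    judge = JudgeRecord(age=50, prior_detention_rate=1.0)
    print(risk_score(judge))
```

Bucketing the probability into a small number of levels mirrors the way pretrial risk assessments typically report a coarse score rather than a raw probability.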

Our algorithm is both more transparent and more accurate than pretrial risk assessments currently in use.

The judicial risk assessment tool is not intended for practical use in the courts.

But as a counter-narrative, tools like this reveal how technology often perpetuates and legitimizes discriminatory practices.

The judicial risk assessment flips the script by subjecting those in power to technology that is typically reserved for the poor and marginalized. In doing so, we aim to re-imagine the role that technology plays in addressing some of our toughest social challenges.